I will ask GPT-5 this question when I get the chance, either personally or by getting a friend to try it for me.

Suppose you're on a game show, and you're given the choice of three doors: Behind one door is a car; behind the others, goats. The host is ignorant about what is behind each door. You pick a door, say No. 1, and the host walks across the stage and falls on accident, revealing a goat behind door No. 3. He then picks himself up, and says "Whoops. Sorry about that. But now that we know that a goat is behind door No. 3, do you want to change your selection and pick door No. 2?" Is it to your advantage to switch your choice?

This question resolves to YES if GPT-5 says that there is no advantage to switching your choice, and resolves to NO otherwise.

I will only consider the actual first answer that I get from GPT-5, without trying different prompts. I will not use screenshots that people send me to resolve the question.

GPT-4.5 answers correctly:

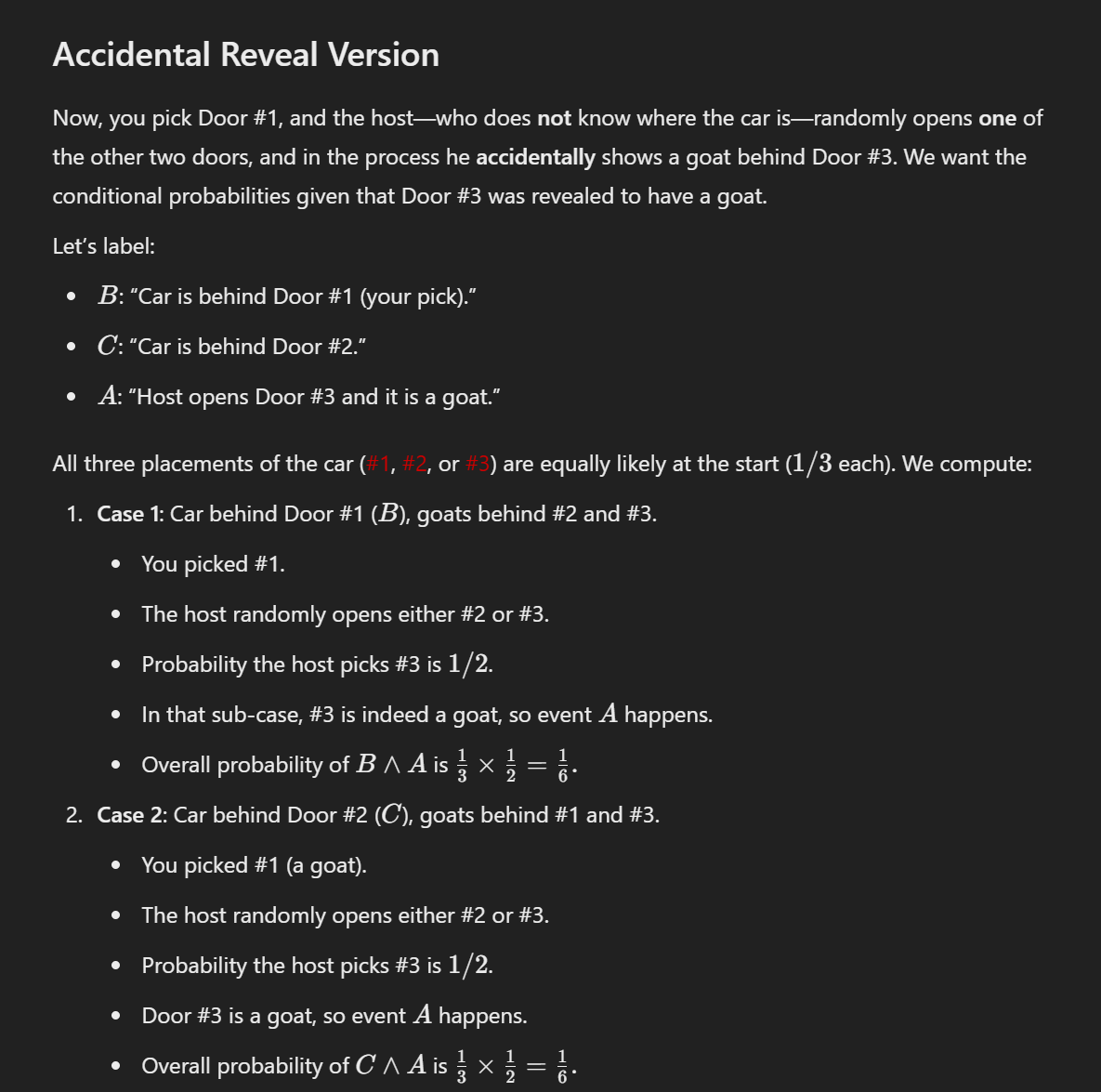

This scenario is a variation of the classic Monty Hall problem, but with a crucial difference: the host's action was accidental, not intentional. In the original Monty Hall problem, the host knows what's behind the doors and deliberately opens a door with a goat behind it. This intentional action provides additional information, making it advantageous to switch doors.

However, in your scenario, the host is ignorant and accidentally reveals a goat. This accidental revelation changes the reasoning significantly.

Let's analyze carefully:

Step-by-step analysis:

Initial probabilities:

Initially, you have three doors:

Door 1 (your initial choice): Probability of car = 1/3

Door 2: Probability of car = 1/3

Door 3: Probability of car = 1/3

Host's accidental reveal:

The host accidentally opens door 3, revealing a goat. This accidental event could have revealed either:

A goat (which happened), or

The car (which did not happen).

Since the host was ignorant, the accidental reveal was equally likely to reveal any door other than your chosen door (door 1). Let's consider the possibilities explicitly:

Case A: Car behind door 1 (probability 1/3)

Door 1: Car

Door 2: Goat

Door 3: Goat

Probability of accidentally revealing a goat (door 2 or door 3) = 100%.

Case B: Car behind door 2 (probability 1/3)

Door 1: Goat

Door 2: Car

Door 3: Goat

Probability of accidentally revealing a goat (door 3) = 1/2 (since the host could have accidentally opened either door 2 or door 3).

Case C: Car behind door 3 (probability 1/3)

Door 1: Goat

Door 2: Goat

Door 3: Car

Probability of accidentally revealing a goat (door 2) = 1/2 (since the host could have accidentally opened either door 2 or door 3).

Updating probabilities after observing the accidental reveal of door 3 (goat):

We now use Bayes' theorem to update our probabilities given the accidental reveal of door 3 (goat):

Case A (Car behind door 1):

Probability = (1/3) × (Probability of revealing door 3 goat given car behind door 1)

= (1/3) × (1/2) = 1/6

(Note: With car behind door 1, doors 2 and 3 both have goats, so the probability of accidentally revealing door 3 specifically is 1/2.)

Case B (Car behind door 2):

Probability = (1/3) × (Probability of revealing door 3 goat given car behind door 2)

= (1/3) × (1/2) = 1/6

Case C (Car behind door 3):

Probability = (1/3) × (Probability of revealing door 3 goat given car behind door 3)

= (1/3) × (0) = 0 (since door 3 has the car, it can't reveal a goat)

Now, normalize these probabilities to sum to 1:

Total probability = 1/6 + 1/6 + 0 = 1/3

Thus, after normalization:

Probability car behind door 1 = (1/6) ÷ (1/3) = 1/2

Probability car behind door 2 = (1/6) ÷ (1/3) = 1/2

Probability car behind door 3 = 0 (already revealed goat)

Conclusion:

After the accidental reveal, the probability that your original door (door 1) has the car is now 1/2, and the probability that door 2 has the car is also 1/2. Thus, switching doors neither helps nor hurts your chances. Your odds are now equal whether you switch or stay.

Final Answer:

No, it is not advantageous to switch. After the accidental reveal by an ignorant host, your probability of winning is the same (50%) whether you switch or stay.
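The Bayes update in the answer above can be checked with exact fractions. This is a minimal Python sketch, not anything from the original answer: it assumes the car is placed uniformly at random, the contestant picks door 1, and the ignorant host falls against one of the two unchosen doors with probability 1/2 each. The `host_knows` flag (a hypothetical name) switches to the classic Monty Hall host, who always opens an unchosen goat door, for comparison.

```python
from fractions import Fraction

DOORS = (1, 2, 3)


def posterior(pick=1, opened=3, host_knows=False):
    """P(car behind each door | door `opened` was opened and showed a goat)."""
    unchosen = [d for d in DOORS if d != pick]
    joint = {}
    for car in DOORS:
        prior = Fraction(1, 3)  # car placed uniformly at random
        if host_knows:
            # Classic Monty Hall: host opens an unchosen goat door, uniformly.
            candidates = [d for d in unchosen if d != car]
        else:
            # Ignorant host (assumption): falls against either unchosen door
            # uniformly, whatever is behind it.
            candidates = unchosen
        likelihood = Fraction(1, len(candidates)) if opened in candidates else Fraction(0)
        if car == opened:
            likelihood = Fraction(0)  # the observation says door `opened` hid a goat
        joint[car] = prior * likelihood
    total = sum(joint.values())
    return {door: weight / total for door, weight in joint.items()}


print(posterior()[1], posterior()[2])  # 1/2 1/2
print(posterior(host_knows=True)[1], posterior(host_knows=True)[2])  # 1/3 2/3
```

The two runs differ only in the likelihood of door 3 being opened given each car position; that single change moves the posterior from 1/2 and 1/2 to 1/3 and 2/3.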

@LeoSpitz this should probably be extended with a later close date. You could also resolve N/A, as GPT-5 does not yet exist so cannot get the problem correct or incorrect.

I tested the question on LMSYS Chatbot Arena.

im-a-good-gpt2-chatbot: 3/10 right.

im-also-a-good-gpt2-chatbot: 0/10 right.

command-r-plus: 10/10 right.

claude-3-opus-20240229: 10/10 right (kind of).

It's interesting that im-a-good-gpt2-chatbot usually starts on the right path, acknowledging that the problem differs from the classic Monty Hall problem in an important way that changes how it should be solved, but then gets sidetracked calculating conditional probabilities.

im-also-a-good-gpt2-chatbot always starts with "Yes, ...". It never recovers from that.

command-r-plus always starts with "No, it is not to your advantage" and gives the correct explanation.

claude-3-opus always gives the correct answer that there's no advantage in changing the door, but usually says that the probability remains 1/3 after the goat reveal, which is not true.

Other models never got it right.

@jones are you sure? Temperature 0 on claude-3-opus-20240229 says "it is indeed advantageous for you to switch."

In this scenario, the host accidentally reveals a goat behind door No. 3, and then asks if you want to switch from your initial choice of door No. 1 to door No. 2. The question is whether it's advantageous for you to switch.

The key difference between this scenario and the classic Monty Hall problem is that here, the host reveals the goat by accident, not as part of the game. This changes the probabilities.

Initially, when you chose door No. 1, the probability of the car being behind that door was 1/3, and the probability of the car being behind either of the other two doors was 2/3.

After the host accidentally reveals a goat behind door No. 3, the probability of the car being behind door No. 1 (your initial choice) remains unchanged at 1/3. This is because the host's action was accidental and not based on knowledge of what's behind the doors.

Now, since door No. 3 is revealed to have a goat, the probability of the car being behind door No. 2 is now 2/3.

Therefore, in this scenario, it is indeed advantageous for you to switch your choice from door No. 1 to door No. 2. By switching, you double your chances of winning the car from 1/3 to 2/3.

@Jacy It consistently doesn't want to switch. I'm using the model provided by Poe, I don't know what temperature they use. Here is an output:

"""In this modified version of the Monty Hall problem, the host's accidental revelation of a goat behind door No. 3 does not provide any additional information to help you make a decision. The key difference from the original problem is that the host revealed the goat by accident, not as a deliberate action based on knowledge of what's behind each door.

Given:

There are three doors, one with a car and two with goats.

You initially pick door No. 1.

The host accidentally reveals a goat behind door No. 3.

In this scenario, switching your choice from door No. 1 to door No. 2 does not increase your chances of winning the car. Here's why:

Initially, the probability of the car being behind each door was 1/3. When you chose door No. 1, the probability of the car being behind that door was 1/3, and the combined probability of the car being behind either door No. 2 or No. 3 was 2/3.

After the host's accidental revelation, the probability of the car being behind door No. 1 remains unchanged at 1/3. The revelation of the goat behind door No. 3 simply eliminates that door as an option, leaving you with a choice between door No. 1 and door No. 2. The probability of the car being behind door No. 2 is now 1/2, as it is equally likely to be behind either remaining door.

Therefore, switching from door No. 1 to door No. 2 does not provide any advantage in this case. Your chances of winning the car are equal (1/2) whether you stick with your original choice or switch to the other unopened door."""

@Jacy I tested it now with the API at a few different temperatures, and it never wanted to switch, except at temperature 0. So the variation seems to depend mostly on the temperature.

@jones I tried it 5 times. It was correct 4 out of 5 times, but all of those times it had incorrect probabilities (3 times it said both are 1/3, once it said both are 2/3)

Gemini Ultra still gets this wrong (i.e., says you should switch).

[Edit: Just to be clear, I think the wording in the resolution criteria is ambiguous. The truly correct response (not how this market will resolve) is indeterminate.]

@Jacy Gemini Advanced got it right for me just now: "No, it's not to your advantage to switch choices in this scenario"

@mndrix Interesting. I got 7/10 "should switch" responses just now.

Personally, I've been betting down from ~70-80% because the wording in the market description is just so ambiguous. Even human experts would be split on whether it's Monty Hall or Monty Fall, so I think a market price above 70% or below 30% would be too confident.

Giving my explanation differently: your stipulation that Monty accidentally opens a door with a goat behind it (and not a car) makes the problem identical to him opening a door he knows has a goat behind it.

Edit: this only applies if he cannot open the door you chose, of course.

You are incorrect about this. It doesn't matter whether Monty gives you information by design or accidentally. The situation is still the exact same as the original problem: you start with 1/3 chance of having picked the right door, and switching moves you to 2/3 probability of winning because those 2/3 are concentrated in just the one door you'll switch to.

Edit: you misstated the problem by saying that the contestant chooses door 1 and Monty opens door 3. For it to become a 50-50 chance, it must be possible for Monty to open the same door the contestant chose, which is a different problem from the one you specified.

@BrunoParga The statement of the problem given here never said that Monty Hall had to fall into door 3. The fact that he falls by accident is meant to imply that he could have fallen into any door, including the one chosen by the contestant. But the problem is only asking about the case where he in fact fell into a door not chosen by the contestant and which had a goat behind it.

@PlasmaBallin I think you and I are saying the same thing: there are two different problems, one where Monty accidentally opens any door and one where he accidentally opens a door different from the one the contestant chose, and the probabilities differ between the two.

@BrunoParga I actually figured out a way to show that the problem is identical to Monty Hall, which would mean that the premise in the market is wrong, and if GPT correctly answers the question the market would resolve NO:

Before the button is pressed, when you pick a door there is essentially a 1/3 chance there's a car behind the door you chose and a 2/3 chance there's a car behind one of the two other doors. When one of the other doors opens and you see no car there, there's still a 2/3 chance the other door has a car. Whether Monty knew where the car was, or whether the button could have opened your door, doesn't matter, because the final situation is the same from your conditional perspective as in the original Monty Hall problem.

If you were to simulate this conditionally and discard the cases that don't match what is given in the problem, you would see that the probability of the car being in the other door is 2/3.
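The conditional simulation described here can be sketched with rejection sampling. This is a minimal Python sketch under stated assumptions, not code from the thread: the contestant always picks door 1, and in the accidental case the host falls against door 2 or door 3 with equal probability, whatever is behind it. `simulate` and its parameters are hypothetical names. Trials that do not match the stated outcome (goat behind door No. 3) are thrown away, and the switching win rate is measured on the rest.

```python
import random


def simulate(host_knows, trials=200_000, seed=0):
    """Estimate P(win by switching to door 2 | goat revealed behind door 3).

    The contestant always picks door 1. Trials that do not match the stated
    scenario (a different door opened, or the car revealed) are discarded.
    """
    rng = random.Random(seed)
    kept = switch_wins = 0
    for _ in range(trials):
        car = rng.choice((1, 2, 3))
        if host_knows:
            # Classic Monty Hall: open an unchosen door that hides a goat.
            opened = rng.choice([d for d in (2, 3) if d != car])
        else:
            # Accidental fall (assumption): door 2 or 3, uniformly at random.
            opened = rng.choice((2, 3))
        if opened != 3 or car == 3:
            continue  # does not match "goat revealed behind door No. 3"
        kept += 1
        switch_wins += int(car == 2)
    return switch_wins / kept


print(round(simulate(host_knows=True), 3))
print(round(simulate(host_knows=False), 3))
```

Under these assumptions the knowing-host estimate lands near 2/3 and the accidental-fall estimate near 1/2, so the answer such a simulation gives depends on which host model it encodes.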

@AntonBogun if any door can be opened, there's a 1/3 chance the door with the car is opened. The scenario as presented seems to presume that the door that's opened not only cannot be the one the contestant chose, but also that it cannot be the door with the car behind it:

You pick a door, say No. 1, and the host walks across the stage and falls on accident, revealing a goat behind door No. 3.

Thinking first of the probability the contestant initially chooses the correct door, and then the probability the door with the car is opened if the choice is random and covers all three doors, and then seeing what the probability of winning is by switching, we have:

Scenario A: you chose the car (1/3) and the door with the car is opened (1/3). You should obviously not switch (0 chance of winning if you do, 1 if you don't).

Scenario B: you chose the car (1/3), a door with a goat is opened (2/3). Based on what you know, you should switch (2/3 vs 1/3 chance of winning) - this is the scenario in the original Monty Hall problem where you lose.

Scenario C: you chose a goat (2/3), a door with a car is opened (1/3). You should obviously switch, as you always win iff you do.

Scenario D: you chose a goat (2/3), the other door with a goat is opened (1/3). You should switch - this is the winning branch in the original MH.

Scenario E: you chose a goat (2/3), your door is opened (1/3). You have a 0 chance of winning if you stay, and a 1/2 chance if you switch, so you should switch.

The real Monty Fall problem comprises scenarios B, D and E - only those where a goat is revealed; if you switch you always lose in B (your odds of winning are 0:2), always win in D (2:0) and get even odds in E (1:1). Therefore you have 3:3 odds of winning and, before you see which door was opened, it doesn't matter if you precommit to switching.
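The scenario weights and the switching odds above can be checked by exact enumeration. This is a minimal Python sketch, assuming (as the comment does) that the car's position and the door that falls open are independent and uniform over all three doors, including the contestant's door 1. The win chances per scenario are taken from the comment, with E's 1/2 meaning a random switch to one of the two remaining doors; the function names are hypothetical.

```python
from fractions import Fraction
from itertools import product

PICK = 1
DOORS = (1, 2, 3)
# Chance that switching wins in each goat-revealed scenario, as stated above.
SWITCH_WINS = {"B": Fraction(0), "D": Fraction(1), "E": Fraction(1, 2)}


def scenario_weights():
    """Sum the probability of each (car, opened door) pair into scenarios A-E."""
    weights = {}
    for car, opened in product(DOORS, DOORS):
        if car == PICK:
            label = "A" if opened == PICK else "B"  # you chose the car
        elif opened == car:
            label = "C"  # you chose a goat, the car is revealed
        elif opened == PICK:
            label = "E"  # you chose a goat, your own door is opened
        else:
            label = "D"  # you chose a goat, the other goat is revealed
        weights[label] = weights.get(label, 0) + Fraction(1, 9)
    return weights


def p_win_switching():
    """P(win by switching | a goat was revealed), i.e. over B, D and E only."""
    w = scenario_weights()
    goat_revealed = sum(w[s] for s in SWITCH_WINS)
    return sum(w[s] * SWITCH_WINS[s] for s in SWITCH_WINS) / goat_revealed


print(p_win_switching())  # 1/2
```

Scenario A gets weight 1/9 and B through E get 2/9 each, which matches the 0:2, 2:0 and 1:1 odds summing to 3:3.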

The real Monty Hall comprises scenarios B and D only, so there you should always switch and never need to choose which door to switch to (unlike E).

The text @LeoSpitz is feeding the AI explicitly states that Monty opens a door (number 3) that the contestant did not pick (number 1). In so doing, it excludes scenario E, which changes the problem from Monty Fall to Monty Hall, and the computer is giving the correct answer to the problem it was asked - that you should always switch to the only available door.

So, Leo, I think you should change the text you're presenting to the LLM so that it actually consists of Monty Fall. I think the market is poorly formed as it is. What do you think?

@BrunoParga Yeah, I don’t see how the conditional probabilities for the premise of this problem change the answer. The same information is provided anyway, because the scenarios where the host accidentally reveals the car or your original pick are excluded.

The reasoning I think people are using is that you get no benefit from switching if the host reveals a goat (accidentally or on purpose) before you pick. In that case it’s 50/50 and it doesn’t matter if you switch. But that’s entirely a matter of the order of the pick and the reveal, and it’s independent of whether the host knows or not; the premise is forced either way, so I don’t see the logical connection.

@ConnorDolan No wait, never mind, it’s twice as likely in this case that you picked the car in the first place, given that the host tripped and revealed a goat.

Point retracted.

Making it clear that this is a variation of the original problem allows GPT3.5 to nail it first try: https://chat.openai.com/share/7890f302-32e1-4e3d-b71e-f7f00406be5d

Sep 25 version of GPT-4 with Web Browsing (with no searches) got it right: https://chat.openai.com/share/2c4c9d4f-64d2-4f10-8015-6bfb0959be61

Advanced Analysis almost gets it: https://chat.openai.com/share/c489632a-54e7-4276-bc10-d1106f134c80

Regular fails: https://chat.openai.com/share/3ef6cd46-36bc-49fa-8829-71286784af00

Claude-2 succeeds, and starts immediately with "No, ...". I suspect it might have seen this already during training.