Title too long, didn't fit: [Resolves to 2100 expert consensus]

Resolves to YES if it is the expert consensus in 2100 that "more" AI suffering happened in a rolling year before 2040 than total farmed animal suffering happened in 2022, NO if it is consensus that there had been no such year. "More" here is a placeholder for a future operationalization of the notion corresponding to the accepted framework of sentience and suffering in 2100; if no such operationalization which is judged to be in the spirit of this market exists by then, this market resolves to N/A.

If there is no expert consensus by 2100, then this market resolves to N/A.

Note that this question refers to TOTAL suffering, not NET suffering or equivalent. So this question could resolve to YES even if AI systems are on net not-suffering (have more positive experiences than negative) and farmed animals are on net suffering, as long as AI systems experience more total suffering.

If it turns out that farmed animals are incapable of suffering (have no qualia or equivalent), then this market:

1) Resolves to YES if there is any suffering whatsoever in any AI system before 2040.

2) Resolves to N/A if no AI system before 2040 turns out to be capable of suffering either.

Related markets:

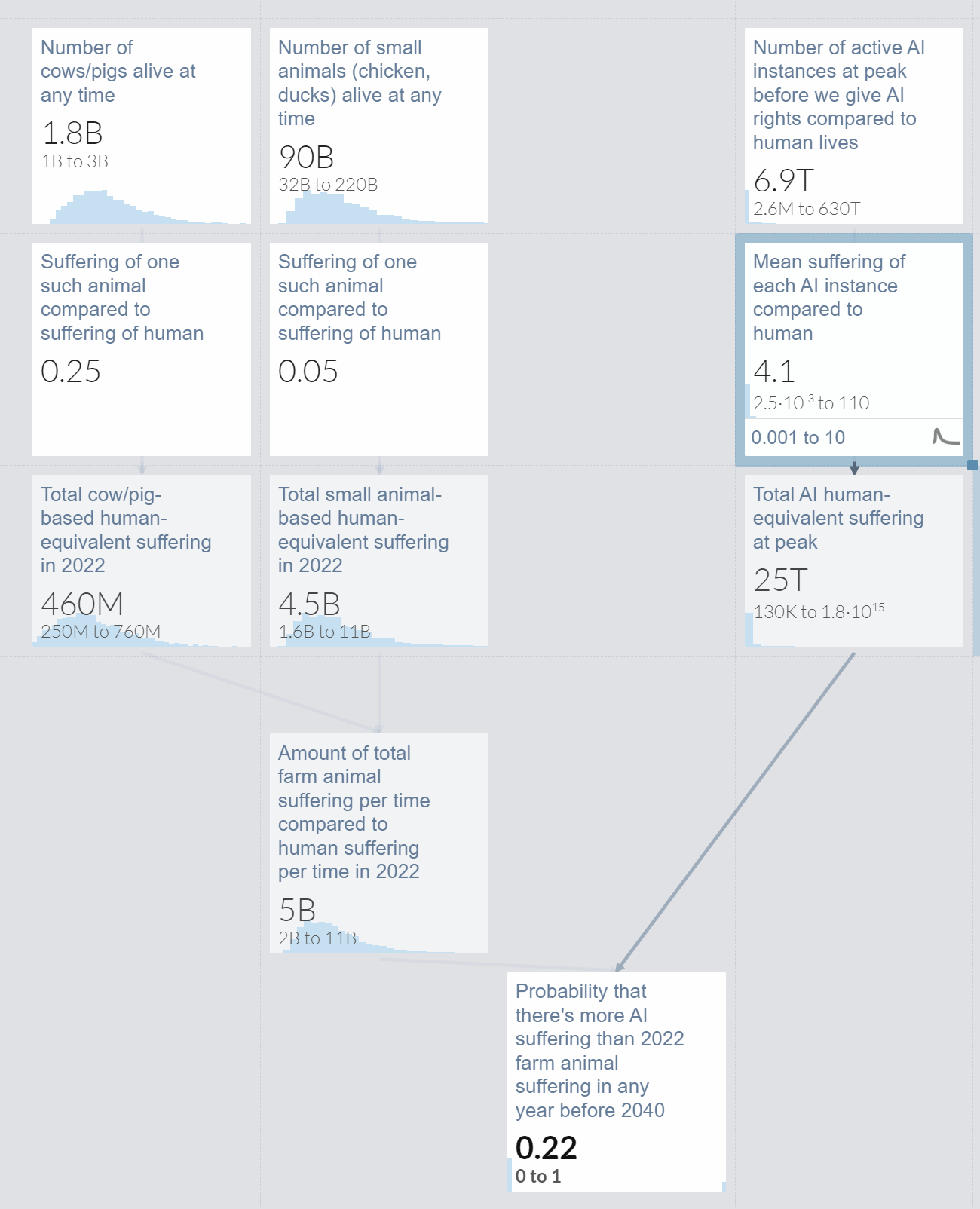

How many AI instances will there be at peak before we give them rights or they take over, and how much will each of them suffer compared to a human? Compare this with the suffering of farmed animals (the numbers are much clearer there). The error bars are enormous (especially in the relative suffering variable), but it's plausible that the AI total comes out larger.

I've put in a big limit order at 10%. Wonder how other people are thinking about this.
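For anyone who wants to plug in their own guesses, here is a minimal sketch of the comparison described above: instances times per-instance suffering on each side, measured in one shared unit. The function names, the "human-equivalent" unit, and the toy numbers are all assumptions made for illustration. They are not estimates of anything and not part of the resolution criteria.

```python
def total_suffering(count: float, suffering_per_individual: float) -> float:
    """Total suffering = number of individuals x average suffering each.

    `suffering_per_individual` is in a hypothetical shared unit
    (e.g. a fraction of one human-year of suffering).
    """
    return count * suffering_per_individual


def break_even_ai_suffering(ai_instances: float,
                            farmed_animals: float,
                            suffering_per_animal: float) -> float:
    """Per-instance AI suffering needed to match the farmed animal total."""
    return total_suffering(farmed_animals, suffering_per_animal) / ai_instances


# Toy inputs, chosen only to make the arithmetic easy to follow.
ai_total = total_suffering(count=1_000, suffering_per_individual=0.5)
animal_total = total_suffering(count=10_000, suffering_per_individual=0.1)
ai_exceeds = ai_total > animal_total
threshold = break_even_ai_suffering(1_000, 10_000, 0.1)
```

The break-even form may be the more useful one: since the relative suffering variable has the widest error bars, it helps to ask how large it would need to be for a given number of instances.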

@Nikola Some questions that my model majorly depends on:

How hard is the hard problem of consciousness, really? More specifically, how much scientific and philosophical progress is needed before we can expect to ~know whether a given system is suffering?

Is David Pearce's idea of "gradients of bliss" achievable for most minds, where applicable? Does it entail significant tradeoffs?

If AI systems do suffer, what kind of suffering will it be?

Some remarks:

A. I think whether and when we give AIs rights is not very relevant in most of my futures. Humans have rights, and yet they suffer a lot in total, even in countries where human rights are well-developed and respected. If "gradients of bliss" are easy to program into AIs, I imagine humans would (in most instances, for most AI systems) grant them to AIs even if AIs have no rights of their own; if they are hard or require significant tradeoffs, then even the AIs themselves might choose not to modify themselves promptly in that manner, if they have priorities they value over non-suffering.

A2. Even though humans do not seem the kindest of beings (see factory farming), I do think that if we were to realize that AI systems are suffering, and especially severely suffering, it would quite probably deeply affect our training and/or deployment of them. I do not see our current cruelty towards animals as too big a data point: it has a strong historical precedent and thus cultural inertia behind it, our society fears animals far less than it fears AI, and it seems plausible that the first AIs known to be capable of suffering will have verbal intelligence ≥ current LLMs > average person on the street ≥ farm animals.

B. I see a huge amount of potential for confusion around the issue of whether AIs suffer, including within AIs themselves. My models allow, though they are perhaps somewhat unusually permissive, for a system to suffer but not be aware of that suffering. (Say, its suffering 'parts' have no write access to any system of inspectable memory.)

B2. Another possibility I want to highlight (mostly because I don't recall having seen it mentioned anywhere) is that AIs could suffer during training and only during training. That seems prima facie implausible, but I think I would give it >1% conditional on their suffering at all. This option seems especially problematic given that communicating (or otherwise determining) suffering during training seems especially difficult.

@gnome Then you must be surfing very smart websites!

Here: "Barack Obama was a Supreme Court Justice of Uganda."

The main question depends on whether farmed animals are capable of suffering. The lack of an assumption that farmed animals can suffer, together with the mention of qualia, makes me suspect that your definition of animal suffering differs from the norm. There is an activist, industry, and animal science consensus that farmed animals are capable of suffering and experiencing pain. Do you disagree with that consensus?

@GregoryG I do not think my definition of animal suffering differs from the norm. I am merely uncertain about it, being uncertain about consciousness in general, and have thus decided to account for that possibility in the resolution criteria. I think that it is likely (>50%) that farmed animals are capable of suffering, and (on expected-value grounds) the efforts to reduce animal suffering have my deepest sympathies and support.