ARIA's Safeguarded AI programme is based on davidad's programme thesis, an ambitious worldview about what could make extremely powerful AI go well. ARIA aims to disburse £59m (as of this writing) to accomplish these ambitions.

https://www.aria.org.uk/wp-content/uploads/2024/01/ARIA-Safeguarded-AI-Programme-Thesis-V1.pdf

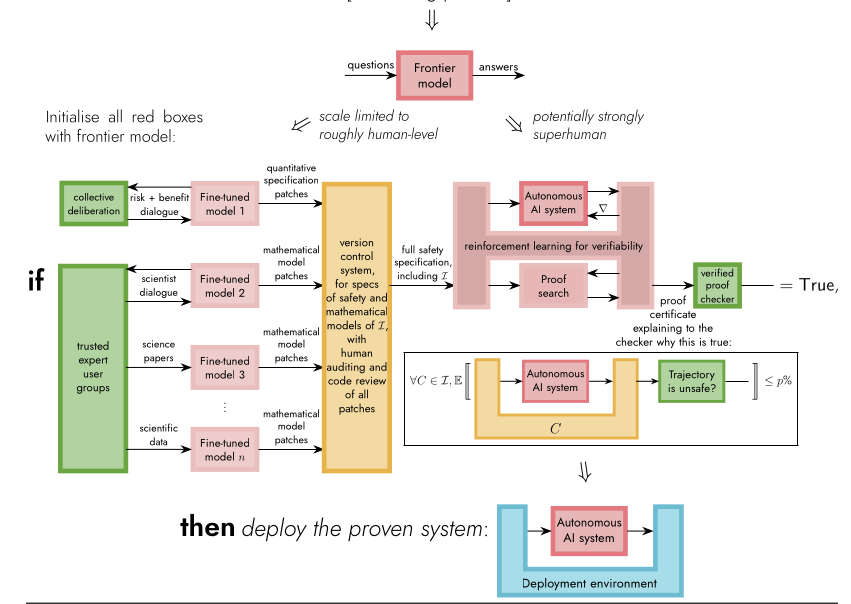

The Safeguarded AI agenda combines world modeling and proof certificates to create gatekeepers: quantitative computations that simulate an action being taken and only pass it along to meatspace if it is safe up to a given threshold.

"The real world" means deployment as a quality assurance procedure in an actual industry with actual customers.
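As a toy illustration of the gatekeeper idea described above, here is a minimal sketch in Python. It is not the programme's method: a Monte Carlo risk estimate stands in for a proof certificate, and every name here (`gatekeeper`, `simulate_room`, `too_hot`, the 30-degree limit, the 1% threshold) is a hypothetical chosen for the example.

```python
import random
from typing import Callable, Tuple


def gatekeeper(
    action: float,
    simulate: Callable[[float, random.Random], float],
    is_unsafe: Callable[[float], bool],
    threshold: float,
    n_samples: int = 1000,
    seed: int = 0,
) -> Tuple[bool, float]:
    """Return (approved, estimated_risk) for a proposed action.

    Assumption: risk is estimated by sampling a world model, which is a
    stand-in for the proof certificates the agenda actually calls for.
    """
    rng = random.Random(seed)
    # Simulate the action many times and count the unsafe outcomes.
    unsafe_count = sum(
        is_unsafe(simulate(action, rng)) for _ in range(n_samples)
    )
    estimated_risk = unsafe_count / n_samples
    # Only pass the action along if the estimated risk is within the threshold.
    return estimated_risk <= threshold, estimated_risk


# Hypothetical world model: room temperature after a heater adjustment.
def simulate_room(heater_delta: float, rng: random.Random) -> float:
    return 20.0 + heater_delta + rng.gauss(0.0, 1.0)


# Hypothetical safety specification: the room must stay at or below 30 degrees.
def too_hot(temperature: float) -> bool:
    return temperature > 30.0


if __name__ == "__main__":
    for delta in (2.0, 15.0):
        approved, risk = gatekeeper(delta, simulate_room, too_hot, threshold=0.01)
        print(f"delta={delta}: approved={approved}, estimated risk={risk:.3f}")
```

A sampled estimate like this gives no formal guarantee, which is the gap the agenda's proof certificates are meant to close.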

Does not resolve yes if something seems kinda like a gatekeeper but makes no reference to the ARIA programme.

Does not resolve yes if there are only rumors circulating about a project behind closed doors; it must come from a company that's reasonably public. Do comment if you think it's a viable strategy but will likely only be deployed in secret military contexts.

@RafaelKaufmann Yes, provided that there's a public comment from the creators to the effect that they were influenced by davidad-like ideas.

Does resolving YES depend in any way on how advanced the AI being gated is? I can see weak versions being gainfully deployed in scenarios where failure wouldn't be catastrophic, like some basic industrial automation setup. Or, you know, at the limit, a thermostat. Wouldn't speak much to the viability of the approach towards reducing existential risk though.

@agentofuser No, I don't think that matters. A simple, ordinary QA procedure in a not-necessarily-catastrophic context is sufficient to resolve yes.