Will async SGD become the main large-scale NN optimization method before 2031?

83% chance

Resolves YES if asynchronous stochastic gradient descent (async SGD) becomes the dominant method for large-scale neural network optimization before January 1, 2031.

Related questions

Will the largest AI training run in 2025 use Sophia (Second-order Clipped Stochastic Optimization)?

4% chance

Will we see a public GPU compute-sharing pool for LLM training or inference before 2026?

82% chance

Will the largest machine learning training run (in FLOP) as of the end of 2025 be in the United States?

91% chance

Will the state-of-the-art AI model use latent space to reason by 2026?

3% chance

100GW AI training run before 2031?

33% chance

Will we see the emergence of a 'super AI network' before 2035?

72% chance

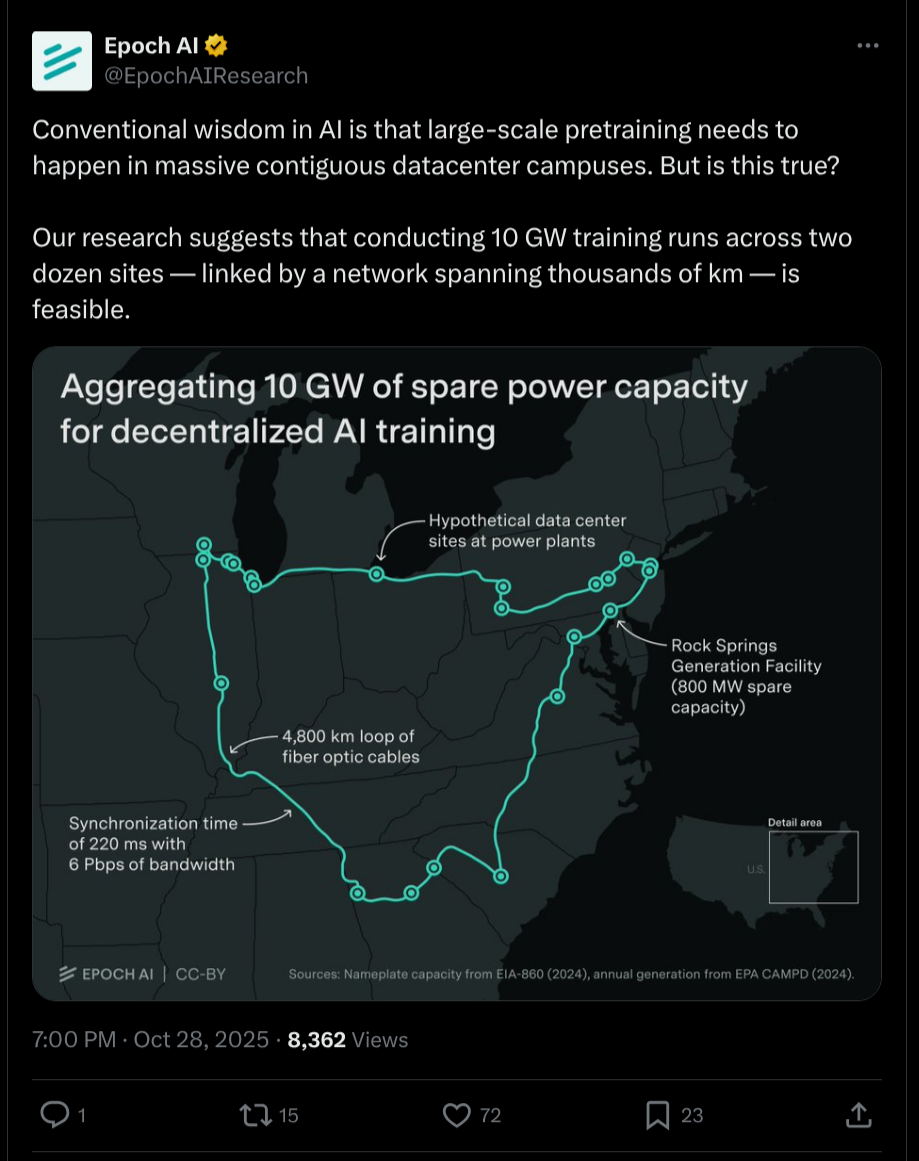

10GW AI training run before 2029?

20% chance

1GW AI training run before 2027?

75% chance

By the time we reach AGI, will backpropagation be used in its weights optimization?

61% chance

What will be the parameter count (in trillions) of the largest neural network by the end of 2030?