Will an LLM with less than 10B parameters beat GPT4 by EOY 2025?

98% chance

How much juice is left in 10B parameters?

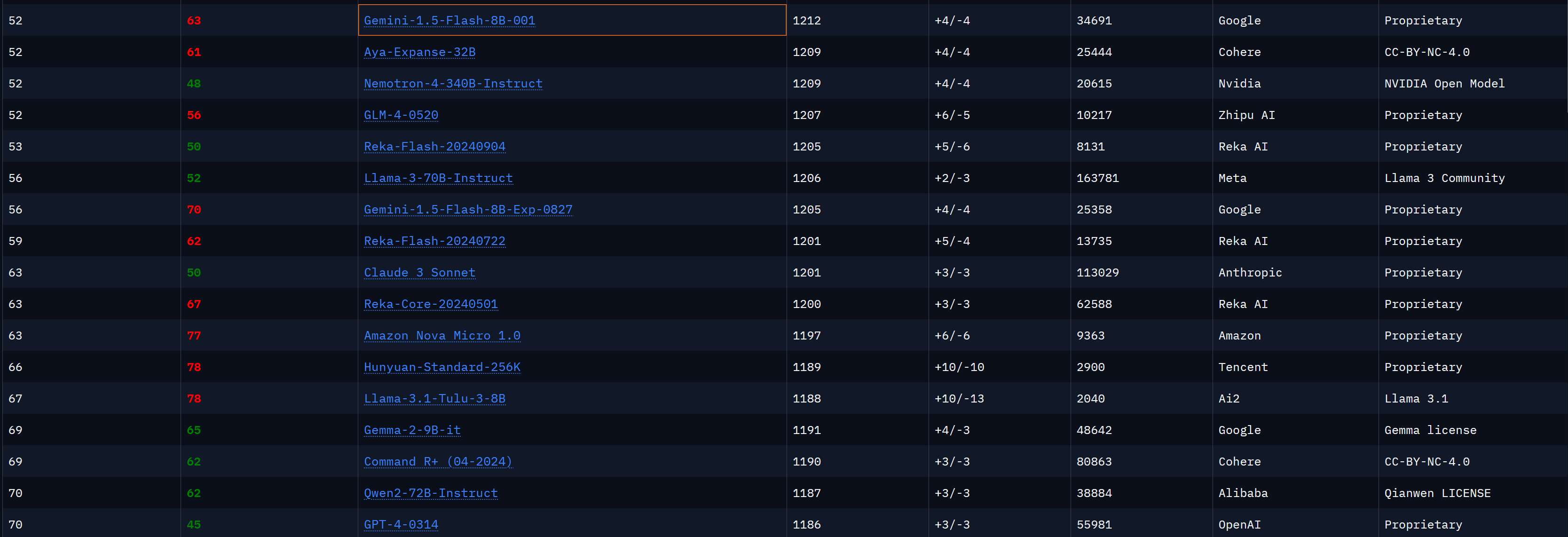

Bar to beat: the original GPT-4-0314 (Elo 1188)

Judged by: the LMSYS Arena leaderboard

Current SoTA: Llama 3 8B Instruct (Elo 1147)
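To get a feel for what these rating gaps mean head to head, here is a minimal sketch that assumes the standard Elo logistic curve (base 10, 400-point scale) applies to the leaderboard's numbers. That is an approximation: the Arena fits its own rating model and only reports it on an Elo-like scale. The ratings are the ones quoted on this page (1188, 1147, and the 1212 cited for Gemini 1.5 Flash-8B in the comment thread), and the variable names are purely illustrative.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expected score of A against B (roughly A's head-to-head win share)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings as quoted on this page (snapshots, not live values).
GPT4_0314 = 1188  # the bar the market sets
LLAMA3_8B = 1147  # "current SoTA" when the market was written
FLASH_8B = 1212   # figure cited in the resolution comment

for name, rating in [("Llama 3 8B Instruct", LLAMA3_8B), ("Gemini 1.5 Flash-8B", FLASH_8B)]:
    gap = rating - GPT4_0314
    share = expected_score(rating, GPT4_0314)
    # The market resolves on a strictly higher rating than 1188.
    print(f"{name}: {gap:+d} Elo, expected score {share:.2f} vs GPT-4-0314, clears bar: {rating > GPT4_0314}")
```

Under this assumption, a 41-point deficit still means winning a bit over 40% of matchups, so the bar is closer than the raw numbers might suggest.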

bought Ṁ500 YES

@mods Resolves as YES. The creator's account is deleted, but Gemini 1.5 Flash-8B easily clears the bar (Elo higher than 1188) with an Elo of 1212; see https://lmarena.ai/

@singer There is a "without refusal" board on LMSYS. The disparity between the main board and that one shows how much of an advantage not having censorship gives you.

Related questions

an LLM as capable as GPT-4 runs on one 4090 by March 2025

31% chance

an LLM as capable as GPT-4 runs on one 3090 by March 2025

33% chance

Will there be an OpenAI LLM known as GPT-4.5 by 2033?

72% chance

Will the best LLM in 2025 have <1 trillion parameters?

38% chance

Will there be an LLM (as good as GPT-4) that was trained with 1/100th the energy consumed to train GPT-4, by 2026?

82% chance

Will the best LLM in 2026 have <1 trillion parameters?

40% chance

Will the best LLM in 2027 have <1 trillion parameters?

26% chance

Will the best LLM in 2025 have <500 billion parameters?

25% chance

Will the best LLM in 2026 have <500 billion parameters?

27% chance

Will an open-source LLM beat or match GPT-4 by the end of 2024?

83% chance