ARIA's Safeguarded AI programme is based on davidad's programme thesis, an ambitious worldview about what could make extremely powerful AI go well. ARIA aims to disburse £59m (as of this writing) to accomplish these ambitions.

https://www.aria.org.uk/wp-content/uploads/2024/01/ARIA-Safeguarded-AI-Programme-Thesis-V1.pdf

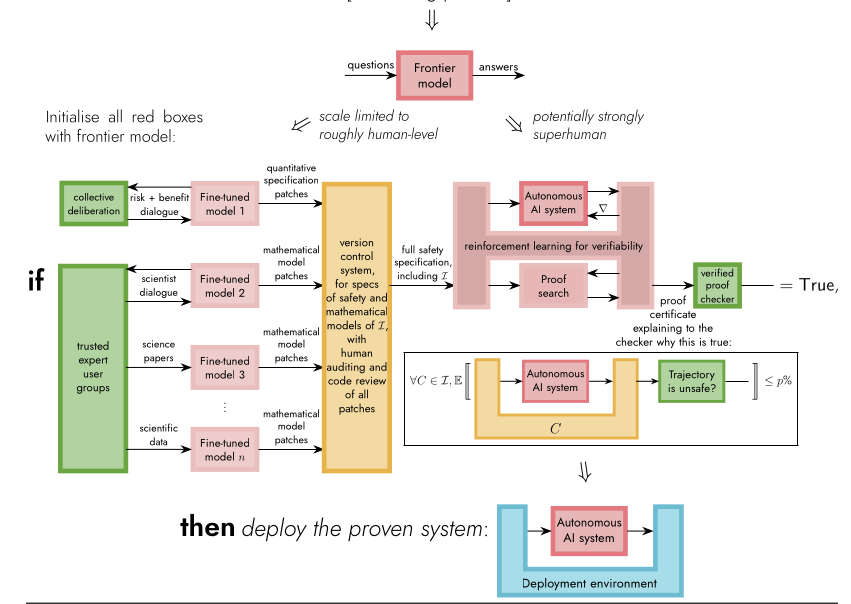

The Safeguarded AI agenda combines world modeling and proof certificates to build gatekeepers: quantitative computations that simulate a proposed action and pass it along to meatspace only if it is safe up to a given threshold.
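To make the gatekeeper idea concrete, here is a minimal toy sketch, assuming a tiny, fully known world model written as a discrete-time Markov chain. Everything in it is hypothetical: the state names, the `ACTIONS`/`DYNAMICS` tables, the functions `reach_unsafe` and `gatekeeper`, and the threshold value. It also swaps in a simpler technique: instead of a checkable proof certificate over a formal world model, it computes the exact bounded-reachability probability of an unsafe state, which plays the role of the "certified" risk bound. This illustrates the shape of a gatekeeper rather than how the ARIA programme implements one.

```python
from fractions import Fraction

# A distribution over next states: list of (state, probability) pairs.
Dist = list[tuple[str, Fraction]]


def reach_unsafe(dynamics: dict[str, Dist], start: Dist,
                 unsafe: set[str], horizon: int) -> Fraction:
    """Exact probability that the chain, started from `start`, visits an
    unsafe state within `horizon` further steps (bounded reachability).
    Unsafe states are treated as absorbing: once hit, mass is counted once."""
    hit = Fraction(0)
    current: dict[str, Fraction] = {}
    for s, p in start:
        if s in unsafe:
            hit += p
        else:
            current[s] = current.get(s, Fraction(0)) + p
    for _ in range(horizon):
        nxt: dict[str, Fraction] = {}
        for s, p in current.items():
            for t, q in dynamics[s]:
                if t in unsafe:
                    hit += p * q
                else:
                    nxt[t] = nxt.get(t, Fraction(0)) + p * q
        current = nxt
    return hit


def gatekeeper(actions: dict[str, Dist], dynamics: dict[str, Dist],
               unsafe: set[str], action: str,
               threshold: Fraction, horizon: int) -> bool:
    """Simulate `action` in the world model and let it through to the real
    world only if its computed risk is at most `threshold`."""
    risk = reach_unsafe(dynamics, actions[action], unsafe, horizon)
    return risk <= threshold


# Hypothetical toy world model (illustrative numbers, not real data).
ACTIONS: dict[str, Dist] = {
    "cautious": [("ok", Fraction(99, 100)), ("risky", Fraction(1, 100))],
    "bold": [("ok", Fraction(1, 2)), ("risky", Fraction(1, 2))],
}
DYNAMICS: dict[str, Dist] = {
    "ok": [("ok", Fraction(1))],
    "risky": [("ok", Fraction(9, 10)), ("crash", Fraction(1, 10))],
    "crash": [("crash", Fraction(1))],
}
UNSAFE = {"crash"}

if __name__ == "__main__":
    limit = Fraction(1, 100)
    for name in ACTIONS:
        risk = reach_unsafe(DYNAMICS, ACTIONS[name], UNSAFE, horizon=3)
        allowed = gatekeeper(ACTIONS, DYNAMICS, UNSAFE, name, limit, horizon=3)
        print(name, risk, "PASS" if allowed else "BLOCK")
```

With a threshold of 1/100, the "cautious" action (risk 1/1000) is passed along while the "bold" action (risk 1/20) is blocked. Exact rational arithmetic is used so the comparison against the threshold is not affected by floating-point rounding.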

Inclusive of toy problems and research prototypes.

Does not resolve yes if something seems kinda like a gatekeeper but makes no reference to the ARIA programme.

@RafaelKaufmann Yes, provided that the ARIA programme or davidad-like ideas are cited in at least a "related work" section.